Human+AI interactions

Artificial intelligence (AI) has become pervasive and is blending into our lives and habits without our noticing. Although AI is very powerful, companies do not know what its impact on society will be, and people do not know how to interact with it. The purpose of this research is to understand the user experience principles of AI-infused products and to define responsible AI guidelines for practitioners.

See the publications & meet the team

Fairness

Machine learning models identify patterns in data. Their major strength is the ability to find and discriminate classes in training data, and to use those insights to make predictions on new, unseen data. However, there is growing concern about their potential to reproduce discrimination against particular groups of people based on sensitive characteristics such as gender, race, or religion. In particular, algorithms trained on biased data are prone to learn, perpetuate, or even reinforce these biases. The purpose of our research is to measure unwanted bias and to find ways to mitigate it.
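As an illustration of what measuring unwanted bias can look like, the sketch below computes the demographic parity difference, one common group-fairness metric: the gap in positive-prediction rates between groups. It is a minimal example under stated assumptions, not the team's method; the function name, the toy predictions, and the group labels `"a"` and `"b"` are all hypothetical.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Return the largest gap in positive-prediction rate between groups.

    predictions: iterable of 0/1 model outputs.
    groups: iterable of group labels (the sensitive attribute), same length.
    """
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    # Share of positive predictions within each group.
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical toy data: eight predictions split across two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

A gap of zero means every group receives positive predictions at the same rate. This is only one of several fairness criteria, and a small gap does not by itself show that a model is fair.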

See the publications & meet the team

Interpretability

The widespread use of Machine Learning and Artificial Intelligence in our society requires a high level of transparency, so that practitioners and users alike are aware of how and why systems behave the way they do. The team leads a research effort to improve Machine Learning interpretability with two aims: (I) avoiding black-box predictions and (II) improving the quality of Machine Learning models.

See the publications & meet the team

Computer Vision

State-of-the-art Computer Vision will improve our internal processes, enabling faster and more accurate action. Be it document understanding for claims processing, flood detection for real-time customer support, or object detection for improved risk understanding, these algorithms are transforming the way we work.

See the publications & meet the team

Quantum

Quantum Computing (QC) is a nascent technology that is heavily hyped, and because of its complexity some aspects can be confusing. There are many different kinds of technologies behind QC and even more possible applications, and behind each of these are countless organisations trying to label their QC technology as the best. We collect all available information and sort through it to find which parts are viable and scalable, so that an applicable QC framework can be built for future use.

See the publications & meet the team

Regulation

Today the question of how the development of technology, and AI in particular, should be governed remains at the forefront of public debate. International organizations, national and local governments, and a growing number of firms that already design and use AI recognize the need for guiding principles and a model for governance. The team analyzes the different principles that shape and guide the ethical development and use of AI.

See the publications & meet the team

Mobility/Safety/IoT

Urban mobility already has a considerable impact on our quality of life and safety. At the same time, novel approaches such as urban sensing, multi-modal and intelligent transport systems, and smart cities offer more sustainable mobility and new services. Our team believes that the right mix of machine learning, IoT, graph theory, and networking will lead to better safety and mobility orchestration for public transportation, private drivers, autonomous and connected vehicles, and pedestrians.

See the publications & meet the team

Robustness

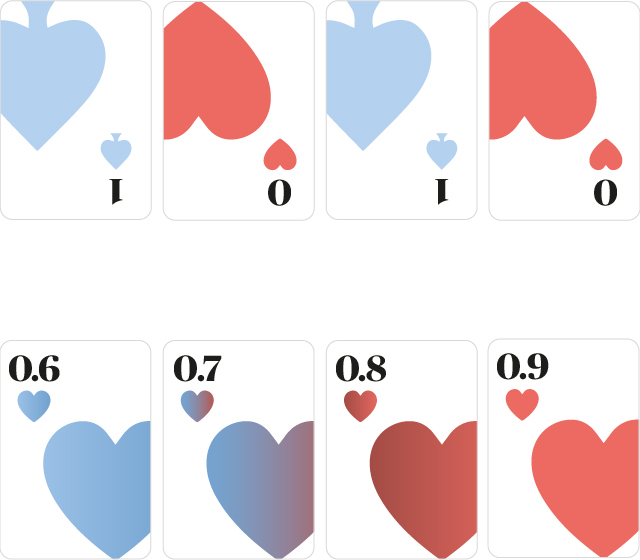

Machine learning (ML) algorithms are now used to tackle important problems such as medical diagnosis. However, they are still not robust to modified inputs such as adversarial examples, and they are often overconfident about their own performance. This is an important issue because the confidence associated with a prediction is often used to decide whether the prediction can be trusted. Our research therefore aims to improve ML robustness and to find better ways of assessing the confidence a model provides, in order to reliably detect misclassifications.
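To make the role of confidence concrete, the sketch below shows the simplest common baseline: take the maximum softmax probability as the confidence score and trust a prediction only when it clears a threshold. This is an illustrative assumption, not the team's approach; the function names, the logits, and the threshold of 0.8 are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities (numerically stable form)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_with_rejection(logits, threshold=0.8):
    """Return (label, confidence, trusted) for one example.

    The confidence is the maximum softmax probability; the prediction is
    flagged as trusted only when it reaches the (hypothetical) threshold.
    """
    probs = softmax(logits)
    confidence = max(probs)
    label = probs.index(confidence)
    return label, confidence, confidence >= threshold


# Hypothetical logits: one clear-cut example and one ambiguous example.
print(predict_with_rejection([4.0, 0.0, 0.0]))
print(predict_with_rejection([1.0, 0.9, 0.8]))
```

Because an overconfident model can assign a high softmax probability to a wrong answer, this baseline can accept misclassified inputs, which is the weakness that motivates better confidence estimates.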

See the publications & meet the team